
How to install Spark on Windows 10 for Jupyter Notebook

Steps to install Spark on Windows 10 for Jupyter Notebook: download

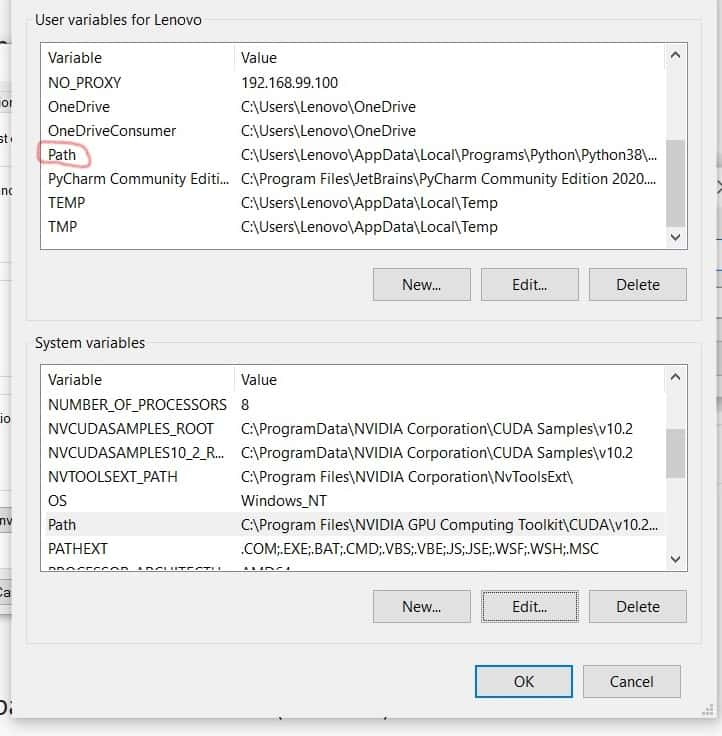

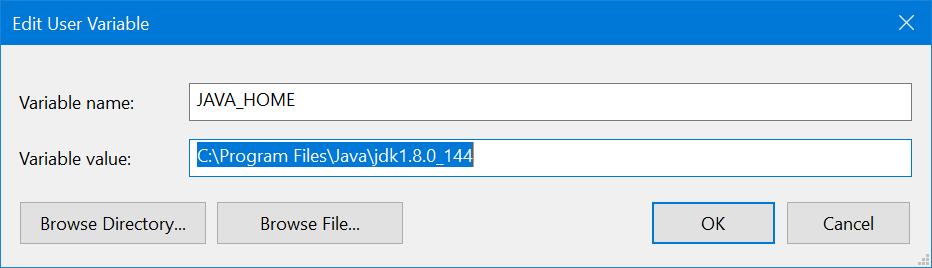

Windows support

To take full advantage of Spark NLP on Windows (8 or 10), you need to set up Apache Spark, Apache Hadoop, Java and a Python environment correctly by following the instructions in "How to correctly install Spark NLP on Windows". Follow the steps below to set up Spark NLP with Spark 3.1.2.

`RUN adduser --disabled-password --gecos "Default user" --uid $`
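Before working through those instructions, it can help to confirm that the pieces are actually in place. The following is a minimal Python sketch, not part of Spark NLP: it assumes the conventional `JAVA_HOME`, `SPARK_HOME` and `HADOOP_HOME` environment variables, and the commonly used `winutils.exe` under `HADOOP_HOME\bin`. The function name `check_prerequisites` is hypothetical.

```python
import os
from pathlib import Path

# Conventional variable names for a Windows Spark setup (an assumption;
# adjust them if your installation uses different ones).
REQUIRED_VARS = ("JAVA_HOME", "SPARK_HOME", "HADOOP_HOME")


def check_prerequisites(env=None):
    """Return a list of problems found in the environment.

    An empty list means every variable is set, points to an existing
    directory, and HADOOP_HOME contains bin\\winutils.exe (assumed to be
    needed by Hadoop on Windows).
    """
    env = os.environ if env is None else env
    problems = []
    for name in REQUIRED_VARS:
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not Path(value).is_dir():
            problems.append(f"{name} points to a missing directory: {value}")
    # Only look for winutils.exe if HADOOP_HOME itself is usable.
    hadoop_home = env.get("HADOOP_HOME")
    if hadoop_home and Path(hadoop_home).is_dir():
        if not (Path(hadoop_home) / "bin" / "winutils.exe").is_file():
            problems.append("winutils.exe not found in HADOOP_HOME\\bin")
    return problems


if __name__ == "__main__":
    issues = check_prerequisites()
    for issue in issues:
        print("MISSING:", issue)
    if not issues:
        print("All prerequisites look present.")
```

Passing an explicit dictionary instead of reading `os.environ` keeps the check easy to try against a planned configuration before you change any system settings.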

Steps to install Spark on Windows 10 for Jupyter Notebook: update

`RUN apt-get update && apt-get install -y \ tar \`

Steps to install Spark on Windows 10 for Jupyter Notebook: software

Spark NLP 3.4.3 has been tested and is compatible with the following EMR releases:

NOTE: EMR 6.0.0 is not supported by Spark NLP 3.4.3.

How to create an EMR cluster via CLI: to launch an EMR cluster with Apache Spark/PySpark and Spark NLP correctly, you need to provide a bootstrap and software configuration.

Spark NLP 3.4.3 has been tested and is compatible with the following Databricks runtimes:

NOTE: Spark NLP 3.4.3 is based on TensorFlow 2.4.x, which is compatible with CUDA 11 and cuDNN 8.0.2. The only Databricks runtimes supporting CUDA 11 are 8.x and above, as listed under GPU.

Install Spark NLP on Databricks

1. Create a cluster if you don't have one already.
2. On a new or existing cluster, add the following to the Advanced Options -> Spark tab:
3. In the Libraries tab inside your cluster, follow these steps:
   - Install New -> PyPI -> spark-nlp -> Install
   - Install New -> Maven -> Coordinates -> :spark-nlp_2.12:3.4.3 -> Install
4. Now you can attach your notebook to the cluster and use Spark NLP!

NOTE: Databricks runtimes support different Apache Spark major releases. Please make sure you choose the correct Spark NLP Maven package name for your runtime from our Packages Cheatsheet.

Databricks Notebooks

You can view all the Databricks notebooks from this address:

Note: You can import these notebooks by using their URLs.

Spark NLP quick start on Kaggle Kernel is a live demo on Kaggle Kernel that performs named entity recognition by using a Spark NLP pretrained pipeline. To set up Kaggle for Spark NLP and PySpark, run `!wget -O - | bash` in a notebook cell.

Install PySpark

To install Spark, make sure you have Java 8 or higher installed on your computer. Then select the latest Spark release, a prebuilt package for Hadoop, and download it directly. This way, you will be able to download and use multiple Spark versions. Open the terminal, go to the path `C:\spark\spark\bin` and type `spark-shell`. Spark is up and running! Now let's run this on Jupyter Notebook.
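The "Java 8 or higher" requirement can be checked from Python before you download Spark. This is a hypothetical helper, not an official tool. It assumes a `java` executable on `PATH` and relies on `java -version` writing a line such as `openjdk version "11.0.12"` to stderr. It accepts both the old `1.8.x` numbering (Java 8) and the newer `11.x` style.

```python
import re
import shutil
import subprocess


def java_major_version(version_output):
    """Return the Java major version found in `java -version` output, or None.

    Handles the legacy scheme ("1.8.0_292" means Java 8) and the modern
    scheme ("11.0.12", "17", "17-ea").
    """
    match = re.search(r'version "([^"]+)"', version_output)
    if match is None:
        return None
    parts = match.group(1).split(".")
    # Legacy numbering: the major version is the second component.
    if parts[0] == "1" and len(parts) > 1 and parts[1].isdigit():
        return int(parts[1])
    # Modern numbering: take the leading digits of the first component.
    leading = re.match(r"\d+", parts[0])
    return int(leading.group(0)) if leading else None


def installed_java_version():
    """Run `java -version` and return its major version, or None if Java is missing."""
    java = shutil.which("java")
    if java is None:
        return None
    # `java -version` prints its banner to stderr, not stdout.
    result = subprocess.run([java, "-version"], capture_output=True, text=True)
    return java_major_version(result.stderr)


if __name__ == "__main__":
    major = installed_java_version()
    if major is None:
        print("Java was not found on PATH")
    elif major < 8:
        print(f"Java {major} is too old; this guide asks for Java 8 or higher")
    else:
        print(f"Java {major} found")
```

Parsing is kept separate from running the command, so `java_major_version` can be tried on any pasted `java -version` output.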

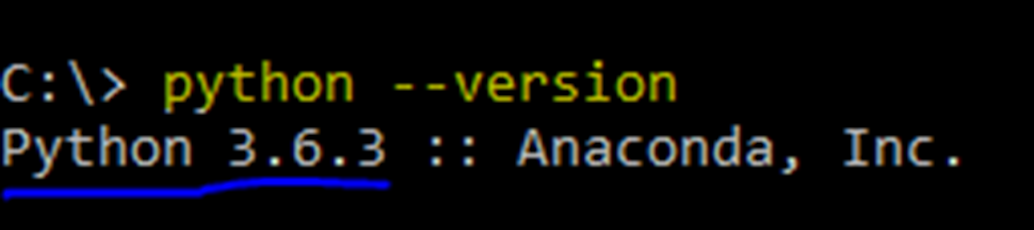

How do I know if PySpark is installed? To test whether your installation was successful, open Command Prompt, change to the SPARK_HOME directory and type `bin\pyspark`. This should start the PySpark shell, which can be used to work with Spark interactively.
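When `bin\pyspark` works from Command Prompt, a Jupyter notebook still has to find the PySpark package that ships inside the Spark download. The third-party `findspark` package does this with `findspark.init()`. The following is a simplified, hypothetical stand-in that shows roughly what such a helper does. It assumes the usual distribution layout: a `python` folder under `SPARK_HOME` and a `py4j-*-src.zip` under `python\lib`.

```python
import glob
import os
import sys
from pathlib import Path


def add_spark_to_path(spark_home=None):
    """Make a downloaded Spark distribution importable from Python/Jupyter.

    Prepends SPARK_HOME/python and the bundled py4j source zip to sys.path
    and returns the two paths placed there.
    """
    spark_home = spark_home or os.environ.get("SPARK_HOME")
    if not spark_home:
        raise RuntimeError("SPARK_HOME is not set and no path was given")
    python_dir = Path(spark_home) / "python"
    # The py4j zip name includes a version number, so match it with a glob;
    # if several are present, take the last one in sorted order.
    py4j_zips = sorted(glob.glob(str(python_dir / "lib" / "py4j-*-src.zip")))
    if not python_dir.is_dir() or not py4j_zips:
        raise RuntimeError(f"{spark_home} does not look like a Spark distribution")
    entries = [str(python_dir), py4j_zips[-1]]
    # Insert in reverse so python_dir ends up first on sys.path.
    for entry in reversed(entries):
        if entry not in sys.path:
            sys.path.insert(0, entry)
    os.environ["SPARK_HOME"] = str(spark_home)
    return entries
```

In a notebook cell you would call `add_spark_to_path()` (or pass the Spark folder explicitly) before `from pyspark.sql import SparkSession`. You can avoid the path manipulation entirely by installing PySpark with `pip install pyspark`. The trade-off is that the notebook then uses the pip-installed Spark rather than the downloaded one.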
